When AI Has an Opinion on Beauty

- Francis Joseph Seballos

- Nov 19, 2025

AI has an opinion on how we should look.

That might sound like science fiction, but it’s already happening every time we open our cameras, apply a filter, or upload a photo. The algorithms behind these tools aren’t neutral — they’ve been trained on millions of images to decide what “beautiful” means.

And what they’ve learned isn’t diversity. It’s compression.

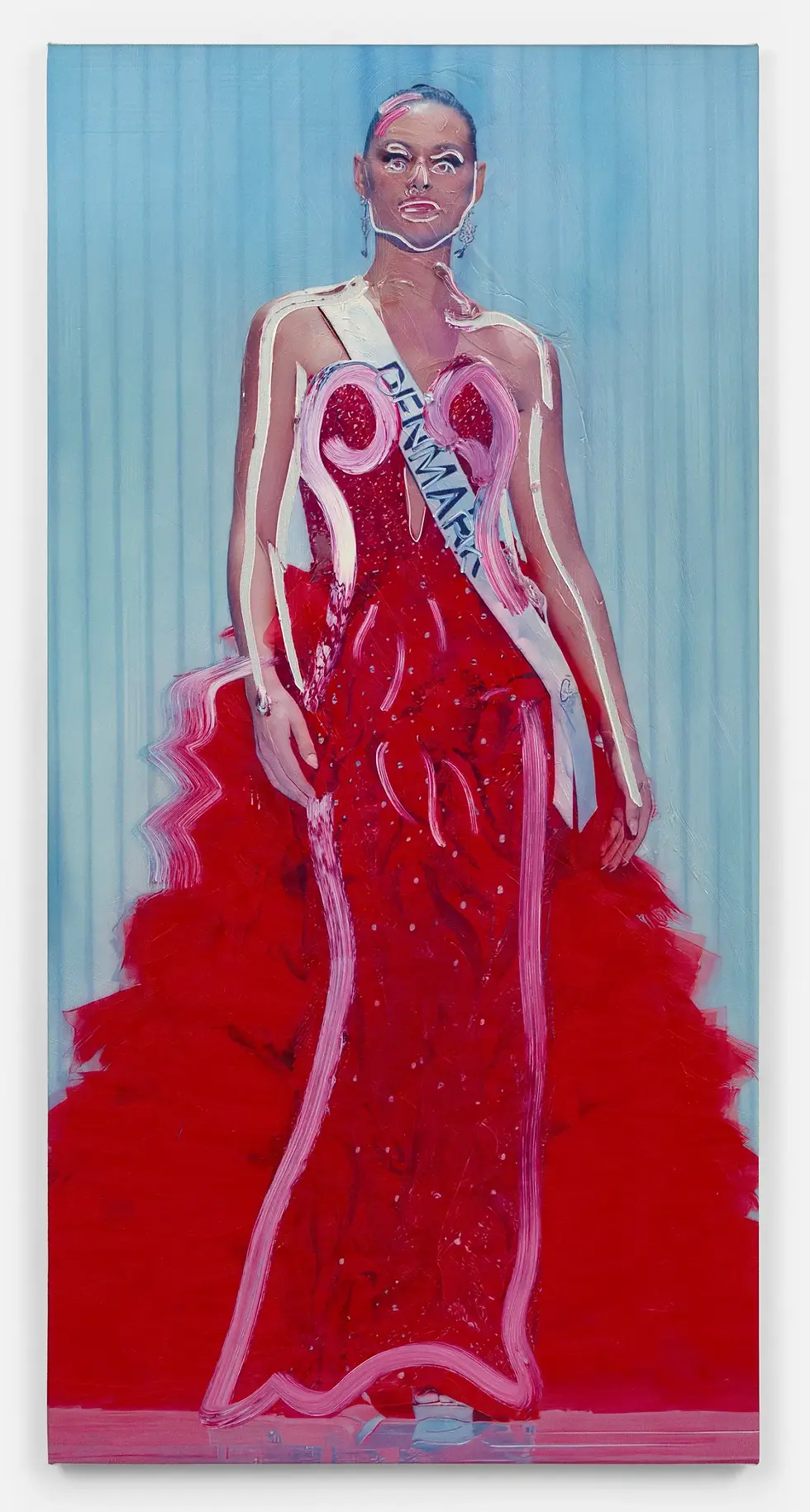

I created Facetune Portraits to make this invisible process visible — to show how technology quietly shapes what we think is attractive, acceptable, and even human. When I feed images of women from around the world into AI beauty apps, the results all start to look the same: lighter, thinner, more symmetrical, more Western.

AI doesn’t just replicate bias; it amplifies it. It isn’t inventing beauty standards but repeating them at scale, teaching us to desire what’s statistically rewarded.

Through Facetune Portraits, I use these distortions as material. The work becomes both evidence and protest — exposing how the language of improvement hides the erasure of individuality.

My art isn’t about rejecting technology. It’s about demanding accountability from it. If AI is going to have an opinion on beauty, then artists must have one, too — one that values complexity over control, and humanity over homogeneity.

The goal isn’t to fight the algorithm, but to reimagine it.